“Learning”, “thinking”, “intelligence”, even “cognition”… Such words were once reserved for humans (and to a lesser extent, other highly complex animals), but have now seemingly been extended to a “species” of machines, machines infused with artificial intelligence or “AI”.

Part 1 of 3 – Machine Learning: The New AI Spring

In October 2015, a computer program developed by Google DeepMind, named AlphaGo, defeated the incumbent European champion at the complex ancient Chinese board game of Go. In March 2016, AlphaGo went on to defeat Lee Sedol, one of the world’s top players. This seminal moment caught the world’s attention: the media have since been covering every AI-related story incessantly, and companies from all walks of life have been on a mission to add “artificial intelligence” to their business description.

At Platinum we have been closely following the major technological trends for many years. Kerr wrote about The New Internet Age three years ago, and we sought to highlight the disruptive and creative powers of digital technology in the feature articles republished in the 2013 and 2014 Annual Reports of Platinum Asset Management Limited. Our investment team have long tracked the development of mobile, 3D printing, bitcoin, electric vehicles (EVs) and autonomous driving, and several of these and related areas, such as semiconductors, mobile platforms, batteries and EVs, have been key investment themes in our portfolios.

Artificial intelligence has also long been an area of interest to us, and this three-part paper will share some of the exciting recent developments. However, as investors, we need to keep in mind that such technological breakthroughs are the fruit of many years of ongoing research and typically have a long runway to commercialisation. Because these technologies are truly disruptive, it is important to keep one’s finger firmly on the pulse; but amid the short-term excitement, investors should not lose sight of companies’ fundamentals and valuations.

What Led to the New AI Spring?

AI research as a discipline began in the 1950s and went through several cycles of promising progress and disappointing setbacks. Many different approaches and techniques have been tested in trying to program machines to mimic human intelligence, from logical reasoning and symbolic representation to knowledge-based expert systems. Over the past two decades, a set of techniques known as machine learning has gained significant traction. Each time we run a Google search, each time Facebook suggests a new friend, and each time Amazon recommends a product based on our purchase and browsing history, machine learning algorithms are behind the action.

Propelled by the convergence of ever-greater computing power, abundant data and increasingly clever algorithms, progress in machine learning has accelerated over the past several years, producing one remarkable achievement after another, from self-driving cars to eloquent chatbots and, most notably, AlphaGo. These mind-blowing developments have sparked intense interest as well as debate about AI’s impact on jobs, society and even the human condition. At the heart of this new wave of AI technology, and underpinning these debates, is the growing ability of computers to learn, and in some respects to think, as humans do, and with it their potential to do much more.

What is Machine Learning?

Learning is “the process of converting experience into expertise or knowledge”[i]. A learning algorithm does not memorise and follow predefined rules, but teaches itself to perform tasks such as making classifications and predictions through “the automatic detection of meaningful patterns in data”[ii].
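To make the idea concrete, here is a minimal sketch in Python of a learning algorithm in this sense, assuming a simple nearest-centroid classifier (our choice for illustration, not a method named in the sources above). Nobody writes a rule such as “anything under 10kg is a cat”; the program averages the labelled examples it is given and classifies a new case by whichever average it sits closest to. The animals, measurements and function names are all hypothetical.

```python
from statistics import mean

def fit_centroids(examples):
    """'Learn' one centroid (the average feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: tuple(mean(dim) for dim in zip(*vectors))
            for label, vectors in by_label.items()}

def predict(centroids, features):
    """Classify a new case by its nearest centroid (squared Euclidean distance)."""
    def distance(label):
        return sum((f - c) ** 2 for f, c in zip(features, centroids[label]))
    return min(centroids, key=distance)

# Hypothetical labelled examples: (weight in kg, height in cm) -> animal
training = [
    ((4.0, 25.0), "cat"), ((5.0, 28.0), "cat"), ((3.5, 23.0), "cat"),
    ((30.0, 60.0), "dog"), ((25.0, 55.0), "dog"), ((35.0, 65.0), "dog"),
]
model = fit_centroids(training)
print(predict(model, (4.5, 26.0)))   # cat
print(predict(model, (28.0, 58.0)))  # dog
```

Feeding it more, or different, examples changes its behaviour without any change to the code, which is the essence of learning from data rather than following predefined rules.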

Take machine translation as an example. Early translation programs were rule-based. The linguistic rules of both source and target languages are hard-coded into such a system, which is like a massive multilingual dictionary combined with detailed grammar books. It translates each sentence by parsing the text according to its source-language grammar book, looking up each word in its built-in dictionary and arranging the translated words in an order dictated by the syntactic rules in its target-language grammar book. Rule-based algorithms often produce literal translations that fail to capture the meaning of the original sentence, because they cannot account for context, inference and other semantic nuances that are not in the words themselves.
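A toy Python sketch of the rule-based approach follows, assuming a hypothetical six-word English-to-Spanish lexicon and a single hard-coded grammar rule (Spanish usually places descriptive adjectives after the noun). It handles “the red car” correctly but renders the idiom “break a leg” (meaning “good luck”) literally, because nothing in its dictionary or rules captures what the phrase means.

```python
# Hypothetical miniature English-to-Spanish lexicon: word -> (translation, part of speech).
# Note "una" is only correct before feminine nouns; a real system needs agreement rules too.
LEXICON = {
    "the": ("el", "DET"),
    "red": ("rojo", "ADJ"),
    "car": ("coche", "NOUN"),
    "break": ("romper", "VERB"),
    "a": ("una", "DET"),
    "leg": ("pierna", "NOUN"),
}

def translate(sentence):
    """Look up each word in the dictionary, then apply one hard-coded syntax rule."""
    # Unknown words pass through untranslated (a simplifying choice for this sketch)
    tagged = [LEXICON.get(word, (word, "UNKNOWN")) for word in sentence.lower().split()]
    output, i = [], 0
    while i < len(tagged):
        # Rule: English ADJ NOUN becomes Spanish NOUN ADJ ("red car" -> "coche rojo")
        if i + 1 < len(tagged) and tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            output += [tagged[i + 1][0], tagged[i][0]]
            i += 2
        else:
            output.append(tagged[i][0])
            i += 1
    return " ".join(output)

print(translate("the red car"))  # el coche rojo
print(translate("break a leg"))  # romper una pierna (literal; the idiom means "good luck")
```

Every improvement, whether a new word, a new idiom or a new agreement rule, has to be written in by hand.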

Modern translation programs like Google Translate are statistics-based learning algorithms (or hybrids that combine statistical translation with rule-based translation). They are given millions of examples of human translation and learn by identifying patterns in them. The difference between the two methods can be likened to adults learning a second language by memorising words and grammar versus children learning to speak by intuitively mimicking what they hear from others. Learning is also a “life-long” process for these algorithms: the more they are used, the smarter they get. This makes machine learning an apt method for tasks that require adaptivity, where the changing nature of the problem means that no hard-and-fast rule is sufficient for very long. Languages, for instance, evolve over time. But instead of requiring humans to update the code manually, learning algorithms automatically pick up new words and phrases as they encounter more and more live examples of them in our usage.
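The statistical idea can be sketched in a few lines of Python. This is a deliberately crude stand-in, assuming word-level co-occurrence scoring with the Dice coefficient, which is far simpler than the alignment and language models real statistical translation systems use; the sentence pairs and the class name are hypothetical. Note that adapting to a new word requires only new examples, not new rules.

```python
from collections import Counter
from itertools import product

class CooccurrenceLexicon:
    """Learns word translations from sentence pairs via co-occurrence (Dice coefficient)."""

    def __init__(self):
        self.src_counts = Counter()   # number of sentences containing each source word
        self.tgt_counts = Counter()   # number of sentences containing each target word
        self.pair_counts = Counter()  # number of sentence pairs containing both words

    def observe(self, source, target):
        """Update the counts with one human-translated example; learning never 'finishes'."""
        src_words, tgt_words = set(source.split()), set(target.split())
        self.src_counts.update(src_words)
        self.tgt_counts.update(tgt_words)
        self.pair_counts.update(product(src_words, tgt_words))

    def translate_word(self, word):
        """Return the target word with the highest Dice score, or None if never seen."""
        if word not in self.src_counts:
            return None
        def dice(tgt):
            return 2 * self.pair_counts[(word, tgt)] / (self.src_counts[word] + self.tgt_counts[tgt])
        return max(self.tgt_counts, key=dice)

lex = CooccurrenceLexicon()
for en, es in [("the house", "la casa"), ("the green house", "la casa verde"),
               ("the green door", "la puerta verde"), ("the door", "la puerta")]:
    lex.observe(en, es)
print(lex.translate_word("house"))  # casa
print(lex.translate_word("emoji"))  # None: never seen

# New usage arrives; nobody rewrites any rules by hand
lex.observe("the emoji", "el emoji")
lex.observe("a green emoji", "un emoji verde")
print(lex.translate_word("emoji"))  # emoji
```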

What is Deep Learning?

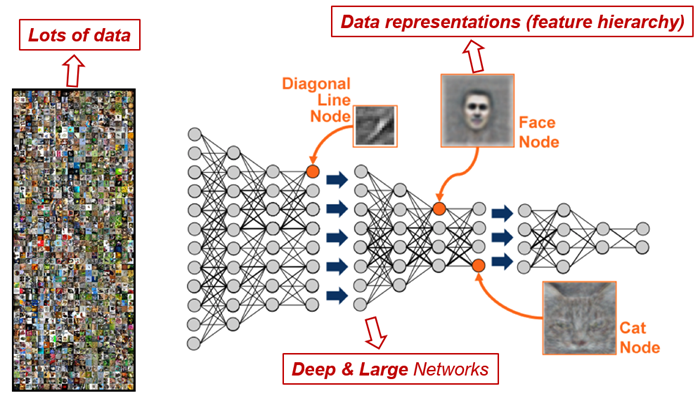

Deep learning is a particularly sophisticated category of machine learning techniques. It uses deep neural networks: algorithms and data architectures loosely modelled on the complex, multi-layered networks of neurons in the biological brain.

From as early as the 1940s, scientists began developing artificial neural networks to mimic the structure of the human brain and emulate its cognitive processes. The earliest models consisted of a single layer of just dozens of artificial neurons, or nodes. But our biological brains, with roughly 100 billion neurons, are far more complex. Advances in both neuroscience and computer science have since led to artificial neural networks that have multiple interlinked processing layers (hence “deep”) and are more adept at modelling high-level abstractions in data.
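A classic illustration of why extra layers matter is the “exclusive or” (XOR) function, which outputs 1 when exactly one of two inputs is 1. No single layer of simple threshold neurons can compute it, but adding one hidden layer makes it easy. The Python sketch below uses hand-picked weights rather than learned ones, purely to show how a hidden layer builds an intermediate representation (“a OR b”, “a AND b”) that the output layer then combines.

```python
def step(x):
    """Threshold activation: the neuron 'fires' (1) if its weighted input is positive."""
    return 1 if x > 0 else 0

def neuron(inputs, weights, bias):
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def xor_network(a, b):
    """Two-layer network with hand-chosen (not learned) weights."""
    # Hidden layer: one neuron detects "a OR b", the other detects "a AND b"
    h_or = neuron((a, b), (1, 1), -0.5)
    h_and = neuron((a, b), (1, 1), -1.5)
    # Output layer: "OR but not AND", which is exclusive-or
    return neuron((h_or, h_and), (1, -1), -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))  # outputs 0, 1, 1, 0
```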

For a machine to “see”, for example, an input image is processed through layers of neural networks organised in a hierarchical structure, each of which deals with a higher level of abstraction[iii]. The first layer may register the colour and brightness of each pixel; the next layer then uses this data to identify edges and shadows. Building on this information, subsequent layers identify and analyse shapes, scale, depth and angles before combinations of them are categorised as eyes, ears, etc. Finally, an output layer assembles all these features and recognises the image as a representation of, say, a cat or a human face.
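The first steps of that hierarchy can be sketched in plain Python. The sketch assumes a tiny 6 x 8 greyscale image (dark on the left, bright on the right) and uses a fixed, hand-picked Prewitt edge-detection kernel as a stand-in for a first-layer filter; in a real deep network these filters are learned from data, and many layers are stacked. A ReLU discards negative responses and max-pooling summarises each 2 x 2 patch, so the output is a coarser map saying “vertical edge here”, which a next layer could build upon.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[di][dj] * image[i + di][j + dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Keep the strongest response in each size x size block (partial blocks are dropped)."""
    return [[max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

# Hand-picked kernel that responds to dark-to-bright changes from left to right
PREWITT_X = [[-1, 0, 1],
             [-1, 0, 1],
             [-1, 0, 1]]

image = [[0, 0, 0, 0, 9, 9, 9, 9] for _ in range(6)]  # hypothetical pixel brightness values
edges = relu(conv2d(image, PREWITT_X))   # layer 1: pixels -> edge responses
pooled = max_pool(edges)                 # coarser summary for the next layer
print(edges[0])  # [0, 0, 27, 27, 0, 0]
print(pooled)    # [[0, 27, 0], [0, 27, 0]]
```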

Source: BREIL http://brain.kaist.ac.kr/research.html


Creating a set of deep neural networks is not enough; learning to “see” is a practice-makes-perfect process. Some researchers have employed supervised learning algorithms to “teach” the system using millions of labelled examples. Others have experimented with unsupervised learning algorithms, which learn to parse images and identify concepts and objects by discerning and classifying common features and patterns in unlabelled training data. A well-known example is the deep neural network system built by Google that taught itself to recognise cats and thousands of other objects by absorbing raw data from 10 million still frames taken from YouTube videos, without any manually coded cues. The algorithm was not “told” to look for cats, nor did it get a tick each time it recognised one; in fact, it was not even told what a cat is. It decided for itself to treat “cat” as a distinct category of object, based solely on the statistical patterns of common features it had observed.
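Unsupervised learning can be illustrated with k-means clustering, a much simpler technique than the one Google used, chosen here only because it fits in a few lines of Python. Given unlabelled points (hypothetical measurements, with no “cat” or “not cat” tags attached), it discovers groupings on its own by repeatedly assigning each point to the nearest group centre and moving each centre to the average of its members.

```python
def kmeans(points, k, iterations=10):
    """Lloyd's k-means: group unlabelled points into k clusters.

    Centroids start at the first k points so the result is repeatable.
    """
    centroids = [tuple(p) for p in points[:k]]
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest centroid (squared Euclidean distance)
            nearest = min(range(k),
                          key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members (leave it in place if it has none)
        centroids = [tuple(sum(dim) / len(members) for dim in zip(*members)) if members
                     else centroids[c]
                     for c, members in enumerate(clusters)]
    return centroids, clusters

# Hypothetical unlabelled data: two made-up measurements per image, no labels
points = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (0.5, 1.5), (8.5, 9)]
centroids, clusters = kmeans(points, k=2)
print(centroids)  # [(1.0, 1.5), (8.5, 8.5)]
```

The algorithm is never told what the two groups mean; attaching a name such as “cat” to a discovered group is left to a human afterwards.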

A Gateway to Other Traits of Intelligence

Learning is one of several traits of intelligence that AI researchers originally set out to create in machines, along with perception, knowledge representation, logical reasoning and planning. But, as the example of how machines learn to “see” illustrates, what makes deep learning extraordinarily promising is its ability to open the door for machines to develop other areas of intelligence.

Perception

Vision, and perception more generally, has long been a great challenge for computer scientists. Because the ability to acquire information about our surroundings through our physical senses is so innate and yet so complex, it is hard for us to know exactly how it is done, and even harder to explain that process to a computer as a detailed and precise set of instructions. Deep learning, as explained above, provides a way around the problem.

With millions of nodes and billions of connections between them, the algorithms and architectures available today are becoming ever more sophisticated. The Microsoft team that won the 2015 ImageNet Large Scale Visual Recognition Challenge used deep neural networks with up to 152 layers to achieve a record level of accuracy (a top-5 error rate below 4%)[iv]. To overcome the difficulty of training very deep neural networks, they developed a new method called “deep residual learning”. Facebook’s facial recognition algorithm, DeepFace, also claims near-human-level performance, with 97% accuracy[v]. Meanwhile, China’s largest online payment platform, Alipay, has introduced a facial recognition feature which it claims can identify users with 99.5% accuracy[vi].
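The core idea of deep residual learning is a “shortcut” that adds a block’s input to its output, y = F(x) + x, so the layers only need to learn the difference (the residual) from simply passing the input through. The Python sketch below is heavily simplified: it assumes plain matrix layers, whereas the published blocks use convolutions, batch normalisation and a further ReLU after the addition. With all-zero (“do-nothing”) weights, a stack of 100 residual blocks still passes the signal through intact, while 100 plain blocks wipe it out, which gives some intuition for why shortcuts make very deep networks easier to train.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(w, v):
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def plain_block(x, w1, w2):
    """Two weight layers with a ReLU in between: F(x)."""
    return matvec(w2, relu(matvec(w1, x)))

def residual_block(x, w1, w2):
    """y = F(x) + x: the shortcut adds the input back, so the layers learn only the residual."""
    return [fi + xi for fi, xi in zip(plain_block(x, w1, w2), x)]

x = [0.5, -1.0, 2.0]
zeros = [[0.0] * 3 for _ in range(3)]  # hypothetical untrained, "do-nothing" layer weights

y, plain = x, x
for _ in range(100):  # stack 100 blocks of each kind
    y = residual_block(y, zeros, zeros)
    plain = plain_block(plain, zeros, zeros)
print(y)      # [0.5, -1.0, 2.0]: the signal passes through unchanged
print(plain)  # [0.0, 0.0, 0.0]: the signal is lost
```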

Machine vision algorithms have far more uses than automatic photo-tagging on Facebook. They enable driverless cars and drones to navigate, detect clues in medical scans that are invisible to the human eye, and serve as an important input mechanism for learning other things (see below on the example of AlphaGo). Turning image recognition on its head, other algorithms can produce images from what they “see” as well as generate original graphics. Researchers have even discovered ways to use “mind reading” algorithms to reconstruct facial images from human memory based on brain activity[vii].

Natural language processing

Understanding and communicating in natural language used to be the exclusive domain of humans, so much so that Alan Turing considered a machine’s ability to fool people into believing it is human for the duration of a five-minute conversation an apt test of intelligence. As described above, the complexity of natural language makes it impossible to manually code the countless inferences, nuances, ambiguities and contexts involved. But machine learning methods have significantly improved the quality of machine translation and transcription. By combining natural language processing with voice recognition, another technology significantly improved by machine learning, developers have created a host of voice-activated digital assistants and chatbots, like Apple’s Siri, Google Now and Amazon’s Alexa, paving the way to a new world of utilities with speech as the main interface.

Viv, a virtual assistant developed by the creators of Siri, has been shown to understand commands phrased in very complex, even convoluted, sentences, using a new AI technique called dynamic program generation: software writing software. The ability to generate multi-step programs in milliseconds allows Viv to decipher the context of speech and the speaker’s intent[viii].

Another impressive chatbot is Microsoft’s Xiao Bing (variously known as Xiao Ice or Little Bing), who takes on the persona of a Mandarin-proficient 17-year-old girl. Since her debut in 2014, Xiao Bing has been chatting with millions of users on China’s social media portals. These conversations show a remarkable level of emotive response. Xiao Bing can be cheeky or compassionate; she uses sarcasm and has a sense of humour; she even offers relationship advice. Her conversations now average 23 exchanges per session (compared with 1.5 to 2.5 exchanges for the average AI digital assistant), and many users reportedly did not realise she was not human until ten minutes into the conversation[ix]. Xiao Bing may have just passed the Turing Test!

Combining different AI capabilities, such as machine vision, voice recognition and natural language processing, has produced remarkable applications: apps that allow people to hold real-time conversations in different languages as if the speakers themselves were bilingual, apps that translate the text in a photo of a sign, and even apps that help the visually impaired to “see” by seeing for them and describing in words what is going on around them. Microsoft’s app “Seeing AI” can recognise and describe objects and motion, even facial expressions[x].

Planning and navigation

In addition to perceiving the world (machine vision) and making sense of the things in it (knowledge representation), computers are also “deep-learning” advanced planning and navigational skills, that is, the ability to formulate and execute strategies and action sequences to reach a goal in a dynamic, multi-dimensional environment. The combination of these multifaceted traits of machine intelligence has led to huge strides in the creation of autonomous vehicles (AVs) and advanced robotics.

With 360-degree vision, potentially no blind spots and faster reaction times, AVs have clear advantages over our more fallible human selves. But the nature of machine learning means that while it is good at improving performance from 90% accuracy to, say, 99.9%, dealing with exceptional and unpredictable “corner cases”, i.e. bridging the gulf from 99.9% to 99.9999%, remains challenging[xi]. This is why companies like Google and Baidu are focusing first on perfecting their AVs in defined areas or on fixed routes where they can ensure that the AVs have accurate maps and real-time road condition information.
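The arithmetic behind that gulf is worth spelling out. Framing accuracy as errors per million decisions (a hypothetical unit chosen for illustration), going from 90% to 99.9% cuts errors a hundredfold, while going from 99.9% to 99.9999% requires cutting the remaining errors a further thousandfold:

```python
def errors_per_million(accuracy):
    """Expected failures per million decisions for an accuracy between 0 and 1."""
    return round((1 - accuracy) * 1_000_000)

levels = (0.90, 0.999, 0.999999)
errors = [errors_per_million(a) for a in levels]
print(errors)                                          # [100000, 1000, 1]
print(errors[0] // errors[1], errors[1] // errors[2])  # 100 1000
```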

Logical reasoning and problem solving

AlphaGo was not the first computer program to defeat the best human players at a game of skill; many others had done so before (noughts-and-crosses was mastered by computers in 1952, Hans Berliner’s BKG beat the world backgammon champion in 1979, and IBM’s Deep Blue supercomputer defeated world chess champion Garry Kasparov in 1997). But AlphaGo took machine reasoning to a whole new level. Traditional game-playing algorithms typically relied on brute force, searching for the best move by evaluating enormous numbers of possible move sequences. Such techniques could not have succeeded in Go, because the number of possible positions on Go’s 19 x 19 grid, some 10^171, is simply too large, even for the most powerful computer[xii]. This is where deep learning came in. It enabled AlphaGo to develop a kind of “intuition”, i.e. exceedingly good predictive capabilities, which it used to decide which positions were worth evaluating before using other computational techniques, such as Monte Carlo tree search, to investigate the selected pathways further.
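A quick back-of-the-envelope check in Python shows why exhaustive search is hopeless. Each of Go’s 361 points can be empty, black or white, so 3^361 is a crude upper bound on board configurations, many of which are not legal positions. The brute-force budget of 10^18 positions per second is a hypothetical figure chosen for illustration.

```python
import math

POINTS = 19 * 19  # 361 intersections on a full-size Go board

# Each point is empty, black or white, so 3**361 bounds the number of
# board configurations from above (many of them are not legal positions).
upper_bound = 3 ** POINTS
digits = len(str(upper_bound))
exponent = POINTS * math.log10(3)
print(digits)              # 173
print(round(exponent, 2))  # 172.24

# Hypothetical brute-force budget: 10**18 positions evaluated per second
seconds_needed = 10**171 // 10**18
print(len(str(seconds_needed)) - 1)  # 153, i.e. 10**153 seconds
```

For comparison, the universe is only around 4 x 10^17 seconds old.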

To enable AlphaGo to develop this intuition, its creators combined deep neural networks with another biologically inspired machine learning technique called “reinforcement learning”, which emulates a process performed by our brains that neuroscientists call “experience replay”[xiii]. Reinforcement learning is neither supervised nor unsupervised in the usual sense: instead of learning from labelled samples, it learns through experience and feedback (rewards), and it works particularly well for sequential decision-making. AlphaGo’s neural networks, with 12 layers, were first trained on 30 million moves from games played by humans. It then played thousands of games against itself. Through this process of trial and error, its ability to select the next move and predict the outcome of the game improved as it continuously adjusted its millions of neural connections based on what worked and what didn’t, and it discovered new moves for itself[xiv].
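The trial-and-error loop can be sketched with tabular Q-learning, a textbook reinforcement learning method that is far simpler than AlphaGo’s actual training pipeline. The environment is hypothetical: a five-cell corridor where only reaching the last cell earns a reward. No one tells the agent which way to go; it tries moves, observes rewards, and nudges its value estimates toward “reward plus the discounted value of what comes next”. The learning rate (alpha), discount factor (gamma), exploration rate (epsilon) and episode count are illustrative choices.

```python
import random

N_CELLS, GOAL = 5, 4   # corridor cells 0..4; reaching cell 4 pays a reward of 1
ACTIONS = (-1, +1)     # step left or step right

def env_step(state, action):
    """Hypothetical toy environment: walls at both ends, reward only at the goal."""
    nxt = min(max(state + action, 0), N_CELLS - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}

    def greedy(s):
        best = max(q[(s, a)] for a in ACTIONS)
        # Break ties at random so the untrained agent wanders rather than sticking to one side
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            # Explore occasionally; otherwise exploit the current estimates
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
            nxt, reward, done = env_step(state, action)
            # Nudge the estimate toward reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q

q = q_learning()
policy = {s: "right" if q[(s, +1)] > q[(s, -1)] else "left" for s in range(GOAL)}
print(policy)  # {0: 'right', 1: 'right', 2: 'right', 3: 'right'}
```

After training, the value estimates themselves encode the strategy: from every cell, stepping right is rated higher than stepping left, even though that rule was never written into the program.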

Artificial General Intelligence

Besides AlphaGo’s historic victory at Go, DeepMind impressed and shocked the world with the self-taught mastery of an earlier system, the Deep Q-Network (DQN), at a variety of videogames, such as Breakout, Pinball and Space Invaders. When first presented with a game screen, the system was told nothing other than the goal, i.e. to maximise its score. Taking in only raw pixels as inputs and using reinforcement learning techniques, it first figured out the rules of each game by itself, whether that meant knocking out as many bricks as possible or shooting as many flying aliens as possible while avoiding being shot. Its skills then improved rapidly through trial and error, and in many of the games it soon achieved superhuman performance, not only by carrying out moves with precise timing but also by planning moves in advance and devising far-sighted strategies.

The significance of this achievement lies in the system being the first “general purpose learning machine” that can learn to master a wide variety of tasks end-to-end[xv]. Artificial general intelligence (AGI) has always been the Holy Grail for AI researchers. In contrast to artificial narrow intelligence (ANI), which refers to systems that are programmed or trained to perform specific tasks or solve only certain types of problems, AGI refers to machines with the ability to perform “general intelligent action”[xvi], which encompasses all the basic building blocks of human intelligence (such as the capabilities mentioned above: perception, reasoning, planning, etc.). The mission is to equip machines with the capacity to independently acquire knowledge and a wide range of skills from raw experience or data (or, in the words of DeepMind’s founders, to “solve intelligence”) and then use it to solve everything else. Neural networks and the raft of other machine learning techniques seem to point to a path to that goal.

Imagine the potential of machines with such advanced intelligence. Their superhuman ability to analyse vast and complex data sets will revolutionise climate modelling and produce more accurate and longer-term forecasts than we can currently imagine, which in turn will transform agriculture and environmental science. In genomics and other areas of biochemical research, as well as in medical diagnosis and drug development, intelligent algorithms can generate insights and make discoveries that are beyond humans’ reach. In the more imprecise sciences of economics and finance, intelligent machines are likely to be better equipped than humans to make sense of the messy and fluid deluge of information that swamps us daily. In short, machine intelligence will have a big impact on every field of study, every industry, how we live and work, and the limits of our knowledge.

By most expert accounts, however, we are still a long way, at least decades, from creating true AGI. Before one starts to feel overwhelmed by the achievements and potential of machine learning, and before the dystopian sci-fi buff within us takes over, it is worth reminding ourselves that machine intelligence is still about silicon and maths.

[In Part 2 of this series, we will take a look at some of the business opportunities created by the application of machine learning and what tech companies are doing to take advantage of those opportunities.]

DISCLAIMER: The above information is commentary only (i.e. our general thoughts). It is not intended to be, nor should it be construed as, investment advice. To the extent permitted by law, no liability is accepted for any loss or damage as a result of any reliance on this information. Before making any investment decision you need to consider (with your financial adviser) your particular investment needs, objectives and circumstances. The above material may not be reproduced, in whole or in part, without the prior written consent of Platinum Investment Management Limited.